Development Cycles & Benefits

This doc is older and may not align with our brand standards

The Cycle

Core system areas

- Data pipeline

- Human supervision for AI

- Team communication and administration

Diffgram Benefits

| Feature | Diffgram | No system | Improvement |
|---|---|---|---|
| Frequency of retraining | Hourly, daily, or weekly | Every 2-6+ months | >72x |
| Number of datasets | 100s to 1000s | 1-10 | >100x |
| Near real-time options | Yes | No | 0 -> 1 |

Diffgram turns these quantities (retraining frequency, number of datasets, near real-time options) into functions of your system and of how deeply it is integrated with Diffgram. You can experiment with many datasets and combinations, and improve performance by fine-tuning sets for each store, location, or subsystem.

The net effect is that the Data Science team can scale deep learning products. A system can ship with a human fallback (the near real-time option). Then, as new stores and hard-to-label cases are found, datasets are split and only the rare, hard cases are worked on.
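The human fallback and the splitting of hard cases can be sketched as below. This is a minimal illustration, not Diffgram's API: the `Prediction` class, the `route` and `split_hard_cases` functions, and the confidence threshold of 0.8 are all hypothetical names and values chosen for the example. It also assumes the model reports a confidence score, which the text above does not state.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    """Hypothetical model output for one item from one store."""
    store_id: str
    label: str
    confidence: float


def route(prediction, threshold=0.8):
    """Accept confident predictions automatically; send the rest to a human."""
    return "auto" if prediction.confidence >= threshold else "human_review"


def split_hard_cases(predictions, threshold=0.8):
    """Group the low-confidence predictions by store.

    Each group is a candidate per-store dataset containing only the
    cases that still need human labeling.
    """
    hard = {}
    for p in predictions:
        if route(p, threshold) == "human_review":
            hard.setdefault(p.store_id, []).append(p)
    return hard
```

With this shape, confident predictions flow through untouched, while the per-store groups returned by `split_hard_cases` are the only items queued for labeling.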

Your system can undergo less initial evaluation: worries about ongoing performance are reduced because there is a clear path to continued retraining.

Development & Operation Cycles

Lean MLOps

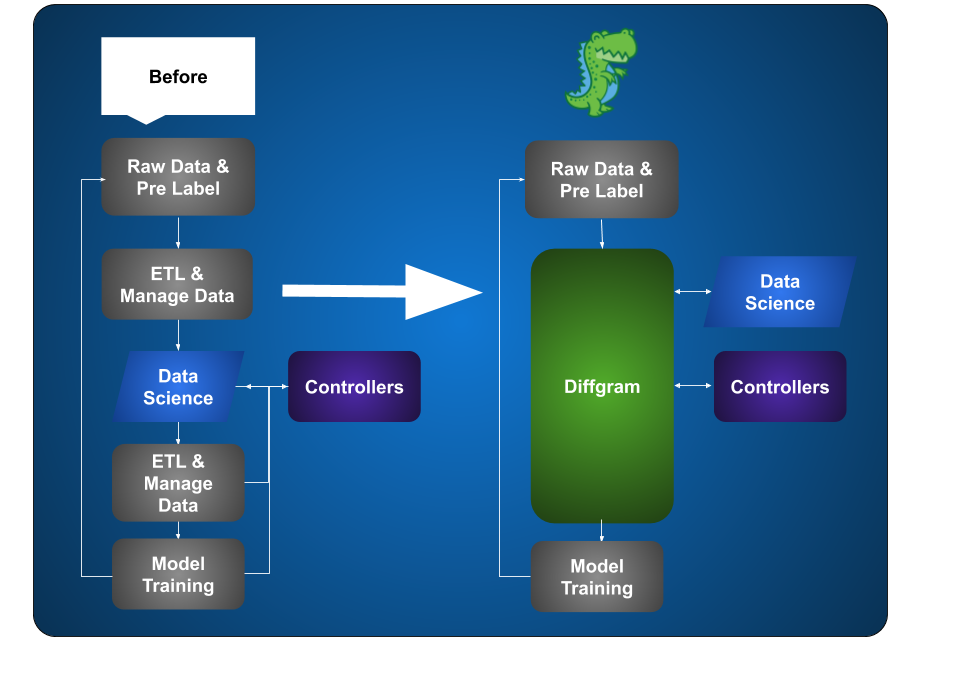

New Paradigm

Diffgram does not provide any model evaluation or deployment. This diagram is provided to illustrate the overall mindset and the concepts at play.

| Area of Focus | New | Old | Trade-offs |
|---|---|---|---|
| Model training & optimization | Tight connection to training data. Expectation that the model will be regularly retrained. Training data input/output is part of the core application. | Manual data input/output. Manual organization. Training is "one-off". | Pros: performance becomes primarily a function of training data; better performance; better path to fix data issues. Cons: requires a system (like Diffgram); requires more engineering effort in general. |
| Model evaluation | Set conditions for a valid automatic deploy (i.e. above a threshold). Sliding-window approach on data for statistical evaluation methods, i.e. the last 30, 60, or 180 days of data. Strong human integration: in addition to statistical analysis, stronger "one-off" and reasonableness checks. | Manually assess validity, e.g. by looking at an area-under-the-curve chart or a confusion matrix. | Pros: can maintain the same rigor in statistical checks, with tighter control on data relevancy; supports high-frequency retraining and frequent deploys. Cons: requires more engineering effort in general. |
| Model deployment | Deploy early in a fail-safe way, e.g. starting as internal recommendations only. Deploy often with a human in the loop. Heuristics to check for correctness. | Mental model of trying to reach a desired level of accuracy prior to deploying. | Pros: projects become unstuck, no longer trying to achieve "perfect"; projects align more closely with real-world data; shipping sooner reduces costs and improves performance. Cons: requires clear communication on expected failure modes; increases operational effort. |
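The "Model evaluation" row describes a threshold condition evaluated over a sliding window of recent data. A minimal sketch of such a gate follows. Since Diffgram does not provide evaluation or deployment, this is purely illustrative: the record layout (`date`, `predicted`, `actual`), the function names, accuracy as the metric, and the 0.9 threshold are assumptions made for the example.

```python
from datetime import date, timedelta


def window_accuracy(records, today, window_days):
    """Accuracy over records dated within the last `window_days` days.

    Returns None when the window contains no records.
    """
    start = today - timedelta(days=window_days)
    recent = [r for r in records if start < r["date"] <= today]
    if not recent:
        return None
    correct = sum(1 for r in recent if r["predicted"] == r["actual"])
    return correct / len(recent)


def should_deploy(records, today, window_days=30, threshold=0.9):
    """Allow an automatic deploy only if windowed accuracy meets the threshold."""
    accuracy = window_accuracy(records, today, window_days)
    return accuracy is not None and accuracy >= threshold
```

Calling `should_deploy` with `window_days` set to 30, 60, or 180 applies the same check to progressively longer histories, so older data can be included or excluded without changing the rule itself.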

Streaming Data - No Static Datasets

In this new paradigm there are no real "datasets", in the sense that the data is static only for the exact moment of training and evaluation. Data becomes more like a dynamic "channel": there is a continuous stream of new training data, and each training run uses a conditional slice of it. The initial training process is effectively just a larger slice of the ongoing stream.
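The idea of a conditional slice of a stream can be sketched as follows. The item layout (`created`, `store`), the `training_slice` function, and the age-based cutoff are hypothetical choices for illustration, not part of Diffgram.

```python
from datetime import datetime, timedelta


def training_slice(stream, now, max_age_days, predicate=lambda item: True):
    """Freeze a slice of the stream for one training run.

    Keeps items created within the last `max_age_days` days that also
    satisfy `predicate`.
    """
    cutoff = now - timedelta(days=max_age_days)
    return [
        item for item in stream
        if item["created"] >= cutoff and predicate(item)
    ]


now = datetime(2024, 6, 1)
stream = [
    {"created": datetime(2023, 6, 1), "store": "a"},
    {"created": datetime(2024, 5, 20), "store": "a"},
    {"created": datetime(2024, 5, 25), "store": "b"},
]

# A routine retraining run: recent data for a single store.
recent_store_a = training_slice(stream, now, 30, lambda i: i["store"] == "a")

# The initial training run: the same operation with a much wider window.
initial = training_slice(stream, now, 3650)
```

Here the only difference between the initial training run and a routine retraining run is the size of the window and the condition applied.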
