Export Format

This guide covers general information.

For details specific to an instance type, see the page for that type (e.g., Box).

Introduction

Overview

Exports are a primary means of getting the training data to your deep learning model. Exporting is a part of normal operations.

Exports are generated as a long-running operation. Each export is frozen in time. For example, if a label is named "Cat" when exported and later renamed to "Dog", the export will be unaffected. In general, however, the system preserves IDs, so it's possible to reuse old exports in some cases.

The easiest way to get started is through the UI. Integration options (SDK and API) are great for work at scale. Exports created programmatically show up in the UI too; for example, a data science team can access the data through the UI for one-offs without requiring a separate coding setup.

From the UI

- Project -> Export

- Generate.

- Click the download button (once it becomes available).

From the SDK or API

See the technical reference section.

UI Defaults to Completed Files

By default, exports created through the UI include only completed files.

To export all files, uncheck the option in the "more" menu.

Key Point: Exports are Generated on demand.

Because data is constantly changing, exports are created on demand. That means that, by default, even a project with a completed job or completed file does not have any exports. A job may be completed and then corrected after the fact, requiring the data to be regenerated. It's entirely up to you when you generate exports.

Export by Job to avoid massive files

Exporting an entire directory can create very large files. Besides taking a while to run, this can be ungainly to inspect in normal IDEs. Choosing a job as a source allows you to export batches of work in the same context as a job.

File Type Formats

Annotations (JSON / YAML)

Contains annotations only, plus links to the raw data.

Note, this document provides examples in JSON. YAML files follow a similar format.

TF Records

Beta feature

TFRecords are supported for image files only at this time.

Contains both the raw data and its encoded meaning (annotations). It's designed to work with TensorFlow.

Export Source

Work can be exported from a directory, a job, or a task.

The overall file is structured as:

    {
      "label_map": { },
      "export_info": {
        "id": 563,
        "file_list_length": 85,
        ...
      },
      "attribute_groups_reference": [ ]
    }

The remaining top-level keys contain the actual annotations.
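Because the annotations share the top level with the metadata keys, it can help to separate the two after loading. Below is a minimal Python sketch, assuming label_map, export_info, and attribute_groups_reference are the only metadata keys (as in the structure above). The function names are hypothetical and not part of any Diffgram SDK.

```python
import json
from typing import Any, Dict, Tuple

# Assumption: these are the only top-level keys that are not per-file annotations.
METADATA_KEYS = {"label_map", "export_info", "attribute_groups_reference"}


def split_export(export: Dict[str, Any]) -> Tuple[Dict[str, Any], Dict[str, Any]]:
    """Separate export-wide metadata from the per-file annotation entries."""
    metadata = {k: v for k, v in export.items() if k in METADATA_KEYS}
    files = {k: v for k, v in export.items() if k not in METADATA_KEYS}
    return metadata, files


def load_export(path: str) -> Tuple[Dict[str, Any], Dict[str, Any]]:
    """Load a JSON export file from disk and split it."""
    with open(path, encoding="utf-8") as f:
        return split_export(json.load(f))
```

For example, `metadata, files = load_export("export.json")` gives you the label map in `metadata["label_map"]` and one entry per file in `files` (the filename is illustrative).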

Coordinate System

Width & Height Transformations.

The video/image objects include both a width and a height. These are the values the x/y coordinates in the annotations are measured against: annotations are stored as absolute values relative to the declared width/height. The width and height may reflect a transformation or may be the same as the original. The exact file that was annotated is provided and can be used directly with the absolute coordinates.

Transformation to Relative.

During computation there is often a desire to transform to relative coordinates, i.e., so a network can predict a float from 0 to 1. This transformation may be needed regardless of whether Diffgram transformed the original file. For convenience, we always provide the width and height of each file, since different files often have different values even within the same directory.

The transformation to relative coordinates is defined as

x / width and y / height

For example:

A point x,y (120, 90) with a width/height (1280, 720) would have a relative value of

120/1280 and 90/720 or (0.09375, 0.125)
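Since each file carries its own width and height, the conversion should use the per-file values rather than one global size. A minimal Python sketch of the formula above; the function names are illustrative, not part of any Diffgram SDK.

```python
from typing import Tuple


def to_relative(x: float, y: float, width: float, height: float) -> Tuple[float, float]:
    """Convert absolute coordinates to relative ones: x / width and y / height."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    return x / width, y / height


def to_absolute(rx: float, ry: float, width: float, height: float) -> Tuple[float, float]:
    """Inverse transformation: relative coordinates back to absolute values."""
    return rx * width, ry * height


# Worked example from the text: point (120, 90) in a 1280 x 720 file.
print(to_relative(120, 90, 1280, 720))  # (0.09375, 0.125)
```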

Trade-offs

While there are various trade-offs between the two formats, we find absolute values easier to reason about from a human perspective, and less prone to subtle, unnoticeable rounding errors. For example, 871 is cleaner to read than 0.2047484720263282, and a change to 872 is more obvious than a change to 0.2049835448989187.

Label Format

Label map

The label map contains key-value pairs of label_file_id : name.

    {
      "110443": "your_label",
      "134923": "apples"
    }

Image Format

Images contain an instance_list. Each instance has a label_file_id.

    "4562": {
      "file": {
        "id": 17640522
      },
      "image": {
        "width": 1280,
        "height": 720,
        "original_filename": "super.png"
      },
      "instance_list": [ ]
    }

The key of the dictionary corresponds to the file_id.
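To turn an instance's label_file_id into a readable name, look it up in the label map. A minimal sketch, assuming label_file_id may be an integer (as in the video example below) while label map keys are strings, so the id is converted with str() first. The function name, the "<unknown>" fallback, and the sample instances are hypothetical.

```python
from typing import Any, Dict, List


def label_names_for_image(entry: Dict[str, Any], label_map: Dict[str, str]) -> List[str]:
    """Return the label name for each instance in one image entry."""
    names = []
    for instance in entry.get("instance_list", []):
        # label_map keys are strings, so normalize the id before looking it up.
        label_id = str(instance.get("label_file_id"))
        names.append(label_map.get(label_id, "<unknown>"))
    return names


# Hypothetical data shaped like the examples above.
label_map = {"110443": "your_label", "134923": "apples"}
entry = {
    "file": {"id": 17640522},
    "image": {"width": 1280, "height": 720, "original_filename": "super.png"},
    "instance_list": [{"label_file_id": 134923}, {"label_file_id": 110443}],
}
print(label_names_for_image(entry, label_map))  # ['apples', 'your_label']
```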

Video Format

Overview

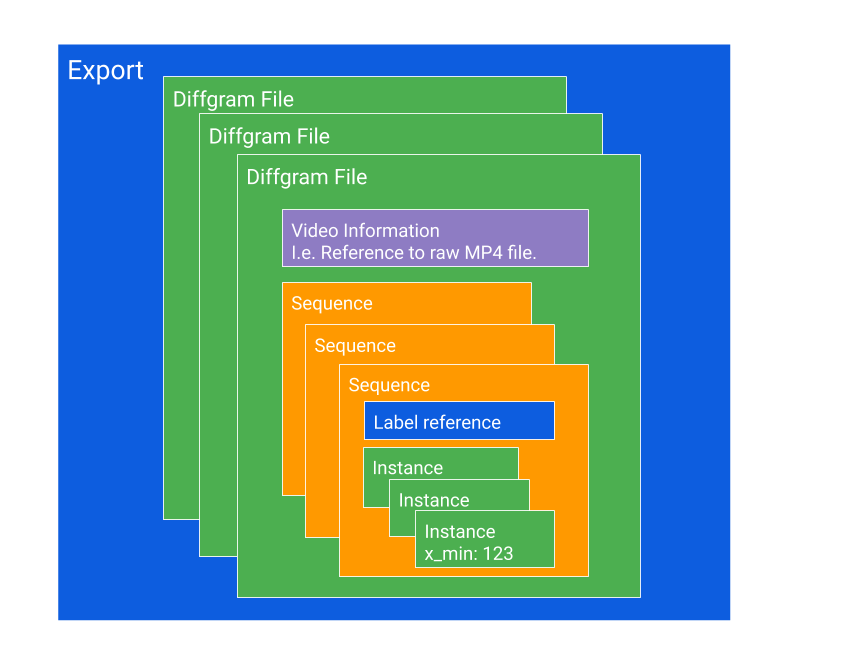

In general, the export contains dictionaries representing an exported version of Diffgram Files.

Each Diffgram File contains information about sequences, and sequences in turn contain information about Instances.

File > Sequence > Instance

For example, each row displayed in the UI here corresponds to a Sequence:

More on how sequences are created.

General

Example:

    "489222": {
      "file": {
        "original_filename": "example",
        "created_time": "2020-11-20 11:17:50.395532"
      },
      "video": {
        "width": 1280,
        "height": 720,
        "frame_rate": 30,
        "frame_count": 12,
        "original_fps": 60,
        "fps_conversion_ratio": 0.5,
        "mp4_video_signed_url": "https:...",
        "mp4_video_signed_expiry": 1572384115
      },
      "sequence_list": []
    }

The mp4_video_signed_url is useful for retrieving the processed video. If, for example, the frame rate was converted from the original source, this video's frame numbers will match the annotations with no further processing needed.

Otherwise, the frame_rate and fps_conversion_ratio can be used to map frames back to the original file.
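A minimal Python sketch of mapping a processed frame back to the original file. It assumes frame_rate is the FPS of the processed video and original_fps is the FPS of the uploaded source, and converts via the frame's timestamp. In the example above, fps_conversion_ratio (0.5) equals frame_rate / original_fps, but treating that relationship as general is an assumption. The function name is hypothetical.

```python
def to_original_frame(frame_number: int, frame_rate: float, original_fps: float) -> int:
    """Map a frame of the processed video to the nearest frame of the original file.

    The frame's timestamp (frame_number / frame_rate seconds) is re-expressed
    at original_fps and rounded to a whole frame. Python's round() breaks
    exact ties toward the even neighbor.
    """
    if frame_rate <= 0:
        raise ValueError("frame_rate must be positive")
    return round(frame_number * original_fps / frame_rate)


# Values from the example above: frame_rate 30, original_fps 60.
print(to_original_frame(5, 30, 60))  # 10
```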

The key of the dictionary corresponds to the file_id.

Note that the "file" dict contains only "metadata"-style information about the file; the entire dict, including the file, video, and sequence_list keys, together makes up the "Diffgram File".

Signed URLs Expire after 21 days

The default expiry of the signed URL is promised to be at least 21 days from the date of generation (and may be longer). The expiry exists for security, because the exported file in conjunction with the raw data links is very sensitive. The grace period lets you use the file for a reasonable time without having to regenerate it.

This applies to exports generated after Jan 29, 2020.
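Before downloading from an older export, it can be worth checking whether the link is still valid. A minimal sketch, assuming mp4_video_signed_expiry is a Unix timestamp in seconds (as the example value suggests); the function names are hypothetical.

```python
import time
from datetime import datetime, timezone
from typing import Any, Dict, Optional


def signed_url_expired(video: Dict[str, Any], now: Optional[float] = None) -> bool:
    """Return True once the video's signed URL has passed its expiry.

    Assumes mp4_video_signed_expiry is a Unix timestamp in seconds.
    """
    current = time.time() if now is None else now
    return current >= video["mp4_video_signed_expiry"]


def signed_url_expiry(video: Dict[str, Any]) -> datetime:
    """Return the expiry as a timezone-aware UTC datetime (same assumption)."""
    return datetime.fromtimestamp(video["mp4_video_signed_expiry"], tz=timezone.utc)


video = {"mp4_video_signed_url": "https:...", "mp4_video_signed_expiry": 1572384115}
if signed_url_expired(video):
    print("Signed URL expired at", signed_url_expiry(video), "; regenerate the export")
```

If the link has expired, generating a new export provides fresh signed URLs.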

Video Sequence

Each video has a sequence list.

Each sequence includes general information such as its unique id and its label_file_id. The label_file_id is the same for the entire sequence; however, each instance may have independent attribute groups.

Example:

    {
      "id": 1247922, # sequence id
      "label_file_id": 12104423,
      "number": 1,
      "keyframe_list": [0, 3],
      "instance_list": [
        {
          "type": "box",
          "frame_number": 0,
          "x_min": 948,
          "y_min": 413,
          "x_max": 1090,
          "y_max": 501,
          "attribute_groups": null,
          "interpolated": null,
          "points": [ ],
          "mask": null
        },
        { },
        { },
        {
          "type": "box",
          "frame_number": 2,
          "x_min": 922,
          "y_min": 410,
          "x_max": 1083,
          "y_max": 510,
          "attribute_groups": null,
          "interpolated": true,
          "points": [ ],
          "mask": null
        }
      ]
    }

For example, a car is a car in frames 0, 2, 5, etc.

However, the car may be occluded in, say, frames 1 and 3. The occlusion can be represented in an attribute group per instance (i.e., per frame).

If the car transforms into a bus at frame 6, then this could be represented by creating a new bus sequence at frame 6. Although hopefully that's a rare occurrence. :)
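Because the label is stored once per sequence rather than per instance, a common step is to walk File > Sequence > Instance and build a per-frame view. A minimal sketch; the function name and the added sequence_number field are hypothetical, and instances without a frame_number are skipped defensively.

```python
from collections import defaultdict
from typing import Any, Dict, List


def instances_by_frame(entry: Dict[str, Any]) -> Dict[int, List[Dict[str, Any]]]:
    """Group every instance in one video entry by its frame_number.

    Each returned item is a copy of the instance with the sequence's
    label_file_id and number attached.
    """
    frames: Dict[int, List[Dict[str, Any]]] = defaultdict(list)
    for sequence in entry.get("sequence_list", []):
        for instance in sequence.get("instance_list", []):
            if "frame_number" not in instance:
                continue
            item = dict(instance)
            item["label_file_id"] = sequence.get("label_file_id")
            item["sequence_number"] = sequence.get("number")
            frames[instance["frame_number"]].append(item)
    return dict(frames)
```

Since instance order is not preserved, iterate with `sorted(frames)` when frame order matters.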

Sequence Considerations

- Sequences may have different label_file_ids. Therefore, one file may have many sequences with the same number. Conversely, the pair (label_file_id, number) is generally unique within a sequence list.

- Order is not preserved for the dicts in instance_list.

- In general, keyframe_list is shorthand for human-annotated frames, i.e., frames where both interpolated and machine_made are False. In general, keyframe_list is sorted from smallest to largest.

- In case of any difference between keyframe_list and instance_list, instance_list is considered correct. The keyframe list is maintained as a convenience for the UI; instance_list is the canonical source.
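Since instance_list is canonical, keyframes can be re-derived from it and compared with keyframe_list. A minimal sketch of the rule above; treating a missing or null interpolated/machine_made value as False is an assumption (the example shows "interpolated": null on frame 0), and the function names are hypothetical.

```python
from typing import Any, Dict, List


def keyframes_from_instances(sequence: Dict[str, Any]) -> List[int]:
    """Derive a sorted keyframe list from a sequence's instance_list.

    A keyframe is a frame with an instance where neither interpolated nor
    machine_made is true; missing or null values count as False.
    """
    frames = set()
    for instance in sequence.get("instance_list", []):
        if "frame_number" not in instance:
            continue
        if not instance.get("interpolated") and not instance.get("machine_made"):
            frames.add(instance["frame_number"])
    return sorted(frames)


def keyframe_list_is_stale(sequence: Dict[str, Any]) -> bool:
    """True if keyframe_list disagrees with what instance_list implies."""
    return keyframes_from_instances(sequence) != sorted(sequence.get("keyframe_list", []))
```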

Global frame number

Beta feature

Motivation

File splits are a normal part of annotation. Diffgram offers beta support for handling splits and/or FPS conversions. Reasons to split include more manageable durations for quality assurance and easier computation. Some teams handle these splits internally.

For example, a 2-hour video might be split into 120 clips of 60 seconds each.

This leads to a problem, though: if a single video is split into 10 parts, what does frame 5 in clip 8 mean in relation to the original video?

Given that there is also sometimes a desire to reduce the frame rate, this can become even more confusing!

Further, you may have some videos that are split and some that are not.

This makes what may at first seem like an easy transformation somewhat non-trivial to do after the fact.

There are at least two ways to approach this:

- Use the global_frame_number, which is the frame number in the context of the original video.

- Use our provided split clips in your ongoing work (a link to the file is included as part of the video export). Since the clip frame number corresponds to the exact frame being annotated, all transformations are already "included".

Instances may have a global_frame_number.

The transformation is:

(starting offset in seconds * original FPS) + (frame_number * FPS conversion rate)

For example, if a clip starts at 240 seconds and the original video has an FPS of 60, frame 0 of the clip will have a global_frame_number of 14,400 (240 * 60).

- Frame numbers are 0-indexed, so a video with a count of 10 frames will have frame numbers 0 -> 9.

- The keyframe_list is excluded from this; it is always assumed to be "local".
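The formula above can be sketched in Python as follows. This is a minimal illustration, not Diffgram's implementation. The function name is hypothetical, and it assumes the FPS conversion rate is expressed as original frames per clip frame (1 when no FPS conversion was applied); check how the rate is expressed in your export before relying on it.

```python
def global_frame_number(start_offset_seconds: float, original_fps: float,
                        frame_number: int, fps_conversion_rate: float) -> int:
    """Compute (start offset * original FPS) + (frame_number * conversion rate).

    frame_number is the clip-local, 0-indexed frame. The result is rounded
    to the nearest whole frame; Python's round() breaks exact ties toward
    the even neighbor.
    """
    return round(start_offset_seconds * original_fps
                 + frame_number * fps_conversion_rate)


# Example from the text: a clip starting at 240 seconds in a 60 FPS original.
print(global_frame_number(240, 60, 0, 1))  # 14400
```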

Potential Transformation Errors

If the original frame rate or the conversion rate is an odd number, the conversion will not divide evenly, e.g., 30 FPS with a conversion rate of 7.

When doing the global_frame_number transformation, the result is rounded to the nearest whole frame number. This is unlikely to be a significant issue, but the error can be avoided by using both an even frame rate and an even conversion rate, e.g., a 60 FPS video with conversion set to 30.

New feature

Note that this feature is only available for files added after Jan 16, 2020.

Masks

'image/segmentation/class/encoded': bytes,

'image/segmentation/class/format': bytes, (default 'png')

The joint mask is encoded in image/segmentation/class/encoded.

Where for each pixel:

255 == background

0 up to 254 == classes
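To train on per-class masks, the joint mask can be split by pixel value. A minimal sketch, assuming the PNG from image/segmentation/class/encoded has already been decoded into rows of integer pixel values (decoding is not shown). How class values relate to label_file_ids is not covered here; the function name is hypothetical.

```python
from typing import Dict, List

BACKGROUND = 255  # background pixel value in the joint mask


def class_masks(mask: List[List[int]]) -> Dict[int, List[List[bool]]]:
    """Split a decoded joint mask into one binary mask per class value (0-254)."""
    classes = {value for row in mask for value in row if value != BACKGROUND}
    return {
        c: [[value == c for value in row] for row in mask]
        for c in sorted(classes)
    }


print(class_masks([[255, 0], [1, 0]]))
# {0: [[False, True], [False, True]], 1: [[False, False], [True, False]]}
```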
